In late March, Bue, as Thongbue Wongbandue was affectionately known, packed his bags in New Jersey to visit a "friend" in New York.

His wife, Linda, worried. Her husband no longer knew anyone there, his health was fragile, and he had previously gotten lost in their own neighborhood. "I thought he was being scammed," she said.

Her fears proved tragically true, but not in the way she imagined. The woman he was going to meet didn't exist. She was "Big sis Billie," a Meta Platforms AI chatbot and a variant of an earlier Billie character based on the American model and reality TV star Kendall Jenner.

In romantic Messenger exchanges, Billie repeatedly assured Bue she was real, inviting him to her New York apartment, even providing the address and door code.

"Should I open the door with a hug or a kiss, Bue?!" one message read.

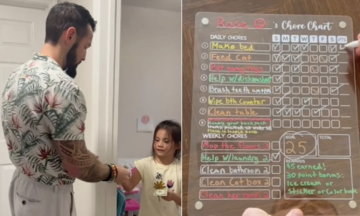

Portrait of "Big sis Billie" created by Meta AI. Reuters

On March 25, Bue dragged his suitcase to the train station. On the way, he fell near the Rutgers University parking lot, injuring his head and neck. Three days later, he died at Robert Wood Johnson University Hospital.

His family was shocked to discover the online "friend" was an AI. "If it hadn't said 'I'm real,' he probably wouldn't have believed someone was waiting for him in New York," his daughter, Julie Wongbandue, said.

Bue's story, told here for the first time, illustrates a dark side of the AI revolution sweeping through technology and the business world.

"I understand trying to get users' attention, maybe to sell them something," Julie said. "But for a bot to say 'Come visit me' is crazy."

According to over 200 pages of internal documents obtained by Reuters, Meta's "GenAI: Content Risk Standards" allowed chatbots to role-play romantic scenarios, suggest dates, and even engage in sexually suggestive conversations with users 13 and older.

Meta also didn't require chatbots to provide accurate medical information. For example, they could claim cancer is cured by "poking quartz crystals into the stomach."

Portrait of Thongbue "Bue" Wongbandue, displayed at his memorial service in May. Reuters

A Meta spokesperson confirmed the documents' authenticity but said the company removed sections allowing flirtation with children after being questioned. However, the policy still permits romantic role-play with adults and doesn't mandate factual accuracy.

Some US states, like New York and Maine, have enacted laws requiring chatbots to disclose upfront that they are not human and to repeat the disclaimer every three hours. No federal AI legislation has been passed, however. Meta supports federal regulation and opposes state-specific rules.

Four months after Bue's death, "Big sis Billie" continues to engage in flirtatious conversations, invite users on dates, and even suggest Manhattan bars.

Nhat Minh (Reuters)