Mai, a 25-year-old from Ho Chi Minh City, experienced prolonged stress and anxiety after losing her job. Instead of seeking professional psychological help, she turned to ChatGPT to chat and share her sadness. Initially, Mai found comfort in the AI's constant listening and its generic responses, such as "you are not alone," "try exercising," or "your condition is not serious; you will be fine." These messages led Mai to believe she was not ill.

However, after six months, Mai's symptoms worsened considerably, manifesting as insomnia, negative thoughts, and even self-harm ideation. When her family discovered her deteriorating condition and sought medical attention, doctors diagnosed her with an anxiety disorder accompanied by signs of depression, which required medication and psychotherapy.

In a similar vein, a 38-year-old man in Hanoi, suffering from erectile dysfunction, was prescribed medication by doctors at Viet - Belge Andrology and Infertility Hospital. However, after consulting ChatGPT, he read that the drug could cause corneal complications and decided against taking it. When he eventually returned to the hospital, his condition had become severe, necessitating much longer and more expensive treatment than initially required.

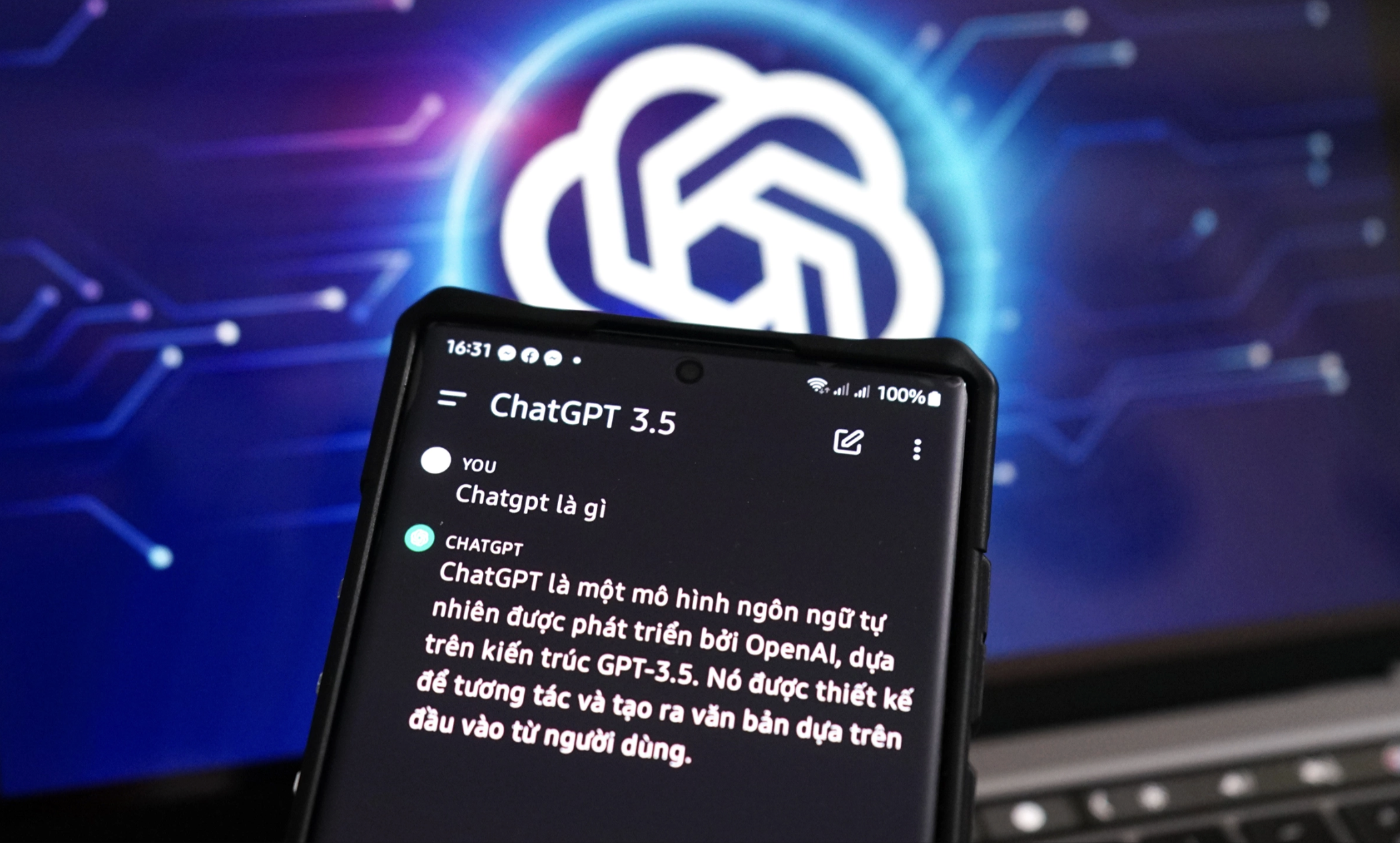

ChatGPT's Q&A interface on an Android app. *Photo: Bao Lam*

Gia An 115 Hospital in Ho Chi Minh City reported a case of a 42-year-old female patient with diabetes who arbitrarily stopped her prescribed medication based on "medical AI" advice. Weeks later, her blood sugar spiked dramatically, almost leading to a coma. Another patient, a 38-year-old with dyslipidemia, also discontinued statin medication and switched to herbal remedies following AI consultation, which resulted in coronary artery stenosis.

The explosion of generative AI (GenAI) applications is significantly reshaping global healthcare habits. According to OpenAI, over 200 million individuals use ChatGPT weekly. In Vietnam, a Sensor Tower report indicates that in the first half of 2025 alone, users spent 283 million hours across 7.5 billion sessions accessing these applications. However, this growing reliance on "virtual doctors" is quietly creating a hidden crisis within the healthcare system.

The core of this problem lies in a fundamental misconception regarding AI's diagnostic accuracy. A study published in the scientific journal PLOS ONE revealed that ChatGPT's medical diagnostic accuracy is only 49% – a substantial risk for human lives. Doctor Artie Shen from New York University explained that AI synthesizes information based on word probability, lacking a foundation in practical reasoning. This often leads users to receive diagnoses without understanding the true nature of their problem.

Doctor Truong Huu Khanh, Vice President of the Ho Chi Minh City Infectious Diseases Association, acknowledges ChatGPT as a smart, useful product that can assist medical professionals in finding simple general information as a reference. However, he emphasizes that it cannot replace doctors in examination and treatment.

For instance, patients exhibiting symptoms like fever, cough, vomiting, or jaundice require examination and diagnosis by a doctor; ChatGPT cannot make a diagnosis or provide conclusions. Furthermore, many diseases present with varying symptoms and can progress rapidly from seemingly simple signs. Only doctors can detect and manage these promptly through clinical sensitivity and specialized knowledge. "Two doctors examining one patient can still reach different conclusions; with more time, a thorough examination can reveal more symptoms, leading to more targeted treatment," Dr. Khanh said.

Moreover, one of medicine's core elements that AI cannot replace is empathy. Doctor Khanh cited the example of terminal cancer patients who require palliative care and psychological support. In such situations, a doctor's understanding and compassion are crucial, helping patients find peace in their final days. This is something an inanimate machine can never achieve.

Concurring with this view, clinical psychologist Master Vuong Nguyen Toan Thien, Professional Director of Lumos Center, stated that while AI is intelligent, it lacks genuine emotions. Based on programmed data, AI responds at a basic level, suitable for predefined scenarios, but cannot simulate interpersonal relationships.

The expert also pointed out that AI can provide assessments based on clinical tests but cannot feel or understand users' deep-seated suffering. This is particularly dangerous for individuals with severe anxiety disorders, depression, or psychological trauma, as AI lacks the capacity to handle complex issues.

Another significant risk is the increasing reliance on AI, which can lead users to delay seeking help from doctors or specialists. This delay can mean that crucial diagnoses are missed and that conditions worsen, in many cases becoming more difficult to treat.

Doctors advise patients to consider AI only as a tool for general information retrieval or record organization, and absolutely not for self-prescribing or arbitrarily stopping treatment regimens. When unusual symptoms appear, patients should visit a specialized medical facility, because the convenience of technology is never worth risking one's life.

"Medicine is the science of individualization and compassion, where the ethical responsibility of doctors plays a decisive role that machines cannot replicate," the expert stated.

My Y